Note: this article is an introduction to several leading scientific theories, written as an easy overview and not intended to be a comprehensive list.

We are undergoing a conceptual ‘paradigm shift’ in science. Science in the 20th and early 21st centuries has made incredible advances, but the population’s understanding of science is still constrained by classical mechanistic and reductionist thinking premised on outmoded axiomatic formalism. Science is on the verge of a major revolution in thought: the recognition that the universe is dynamic, nonlinear, complex, creative, self-developing, and self-organizing to higher levels of energy and complexity.

Revolutions in science begin when scientists discover new data or phenomena that lead them to propose new theories. When these new theories challenge old paradigms and bring about a shift in thinking, systems and methods change fundamentally, opening approaches to understanding that scientists would never before have considered valid.

I have been fascinated by the leading theories in science for most of my life. I built my career in finance on Wall Street by studying emergent science and technology’s impact on finance, business, and markets. My college majors in biology, philosophy, and economics helped me visualize whole integrated systems and identify emergent singularities transforming a system. I became a ‘go-to-guy’ on economic feasibility and competitive positioning strategies for numerous companies with product innovations. I helped many companies obtain venture capital and issue a public stock offering. To stay current I read the latest articles, attended scientific conferences, and worked with many scientists.

Many people, including some educators, scientists, and mathematicians, still cling to outmoded assumptions: Newtonian/Maxwellian classical mechanics; Euclidean/Cartesian notions of space and time; Aristotelian/Empiricist/Kantian logical formalism; an entropic universe; behaviorism; and anti-science ignorance and superstition. Many people also still accept the bankrupt Malthusian assumptions of limited resources and limits to human growth.

Human survival depends on scientific progress. There are no limits to human growth. For millions of years, the human species has developed new technological breakthroughs to solve the fundamental problems facing our existence. From food gathering, to complex hunting, to agriculture, to civilizations, to industrial development, to the nuclear and digital age, the human race has always solved problems, and always will. As a species, we are on the verge of the greatest social-economic-technological revolution in history – in the next few decades we will develop unlimited fusion energy, space colonization, quantum computers, nanotechnology, anti-matter energy, artificial intelligence, synthetic biology, solve many diseases, and much more.

To ensure our own future, science and art education must be adequately funded to expand the population’s creative abilities and knowledge. Awareness of critical scientific concepts is necessary to develop a culture for current and future generations of thinkers and problem solvers to meet the new issues we will face.

Complex Adaptive Systems

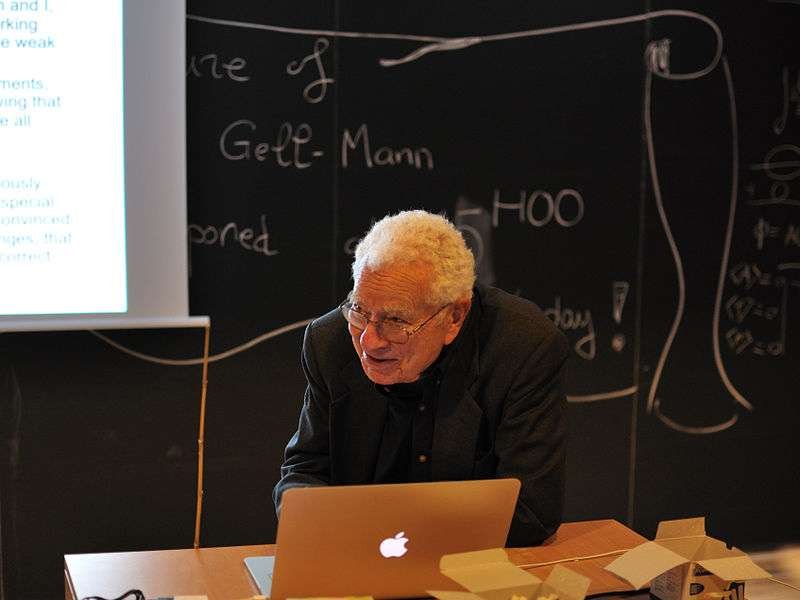

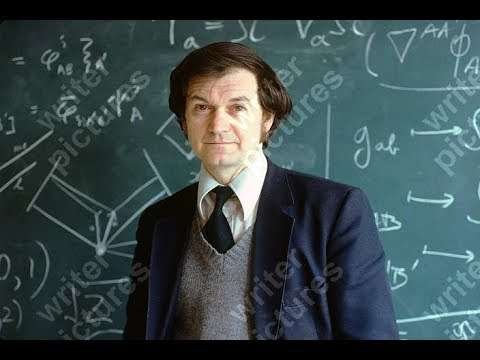

Murray Gell-Mann

According to Stephen Hawking, complexity will be the science of the 21st century. Complexity theory is revolutionary in showing how the universe is creative, dynamic, and self-developing – it is not an entropic, running-down universe but an anti-entropic one, developing from lower states of energy density to higher states of energy density and throughput, creating free energy. The field studies non-linear, open, dynamic, self-developing adaptive systems, such as evolution, the biosphere, climate, the nervous system, the economy, markets, society, the solar system, and galaxies, across biology, physics, geology, chemistry, and other disciplines.

Complex systems are composed of components that interact with each other in self-formed networks, and distinct properties arise from these relationships. The systems self-organize through internal interactions and through interactions with the external environment. Complex systems are non-linear: a change in the output is not proportional to the change in the input. Another feature is emergent behavior that is not apparent from the components alone, such as the system moving from simple states to higher-ordered states of complexity and energy density.
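Both properties, a non-proportional response and sensitivity to small changes, can be seen in even a one-line rule. The following Python sketch uses the logistic map, x_next = r * x * (1 - x), a standard textbook example chosen here for illustration rather than taken from the sources of this article. The parameter values (r = 4.0 for a chaotic regime, r = 2.8 for an orderly one) and the starting points are arbitrary assumptions.

```python
def logistic_step(x: float, r: float) -> float:
    """One iteration of the logistic map: x -> r * x * (1 - x)."""
    return r * x * (1.0 - x)


def trajectory(x0: float, r: float, steps: int) -> list:
    """Return [x0, x1, ..., x_steps] produced by repeatedly applying the map."""
    xs = [x0]
    for _ in range(steps):
        xs.append(logistic_step(xs[-1], r))
    return xs


if __name__ == "__main__":
    # Non-linearity: doubling the input from 0.2 to 0.4 does not double the output
    # (about 0.64 becomes about 0.96, not 1.28).
    print(logistic_step(0.2, 4.0), logistic_step(0.4, 4.0))

    # Sensitivity (arbitrary example values): two starts differing by one part in a billion.
    a = trajectory(0.2, 4.0, 60)
    b = trajectory(0.2 + 1e-9, 4.0, 60)
    for n in (0, 10, 20, 30, 40, 50):
        print(f"step {n:2d}: difference = {abs(a[n] - b[n]):.3e}")

    # Order: with r = 2.8 the same rule settles onto the fixed point 1 - 1/r.
    print(trajectory(0.2, 2.8, 200)[-1], 1 - 1 / 2.8)
```

In the chaotic case the printed differences grow roughly exponentially until they are as large as the values themselves, so long-range prediction fails even though the rule is known exactly; with r = 2.8 the same rule behaves in an orderly way.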

The Santa Fe Institute is a private research institute dedicated to the multidisciplinary study of complex adaptive systems, including physical, computational, biological, neural, and social-economic systems. It was founded in 1984 by Los Alamos National Laboratory scientists (George Cowan, David Pines, Nick Metropolis, Herb Anderson, Peter Carruthers, and Richard Slansky), Nobel laureate Murray Gell-Mann, and others, to develop and disseminate interdisciplinary research on complex adaptive systems. Biologist Stuart Kauffman became one of its leading early researchers.

String Theory Multiverse

String theory is a theoretical framework that is still debated and remains unproven. It is a candidate theory of quantum gravity which proposes that the fundamental constituents of the universe are tiny vibrating one-dimensional energy ‘strings’ rather than point-like particles. Mathematical consistency requires that space-time in superstring theory has 10 dimensions.

String theory is applied to a number of areas, such as reconciliation of contradictions between the theory of relativity and quantum mechanics, black holes, early universe cosmology, nuclear physics, condensed matter physics, superconductivity, superfluidity, and others.

String theory has developed into further theories such as superstring theory and M-theory. From these has come the idea of a multiverse consisting of parallel universes, which has become popular, especially in science fiction, but remains unproven.

Plasma Physics and Fusion Energy

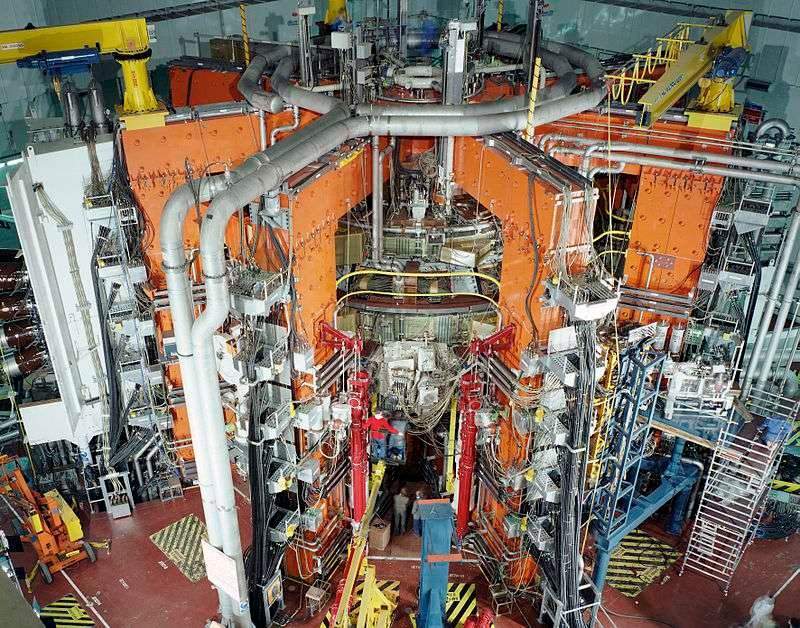

Fusion energy tokamak reactor

Scientists are beginning to master the complex and non-linear science of plasma physics which will enable the development of hydrogen isotope based fusion energy.

Fusion energy will solve all of our energy needs. Today there is a full global development initiative that has made great breakthroughs in recent years. Fusion offers a virtually unlimited fuel supply and an extremely high energy yield by fusing the hydrogen isotopes deuterium (abundant in sea water) and tritium (which can be bred from lithium) into helium in a high-temperature, high-pressure confined plasma. It carries no risk of a runaway chain reaction, produces no plutonium or long-lived high-level nuclear waste, and poses little nuclear proliferation risk, although tritium and the neutron-activated reactor structure are radioactive and must be managed. Some proposals suggest that fusion plasmas could also break materials down into their constituent elements, providing abundant critical elements and rare metals. Reaching fusion breakeven involves advanced sciences of complexity, quantum mechanics, nuclear physics, non-linearity, plasma physics, superconductivity, lasers, particle acceleration, magnetohydrodynamics, and more.

According to the U.S. Energy Information Administration, hydrogen fusion will yield 320 billion J/g (joules per gram) of energy, compared to coal at 27,000 J/g, oil at 46,000 J/g, and fission nuclear energy at 82 billion J/g.
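The comparison can be checked with a few lines of arithmetic. This Python sketch divides the quoted figures and, as an independent cross-check, estimates fusion's energy per gram from the deuterium-tritium (D-T) reaction, which releases about 17.6 MeV per reaction. Treating the fuel as a D-T mixture is an assumption made here, since it is the fuel planned for reactors such as ITER; the atomic masses and unit conversions are standard values.

```python
# Energy densities quoted in the article, in joules per gram.
ENERGY_J_PER_G = {
    "coal": 27_000,
    "oil": 46_000,
    "fission": 82e9,
    "fusion": 320e9,
}

MEV_TO_J = 1.602176634e-13    # joules per MeV (exact SI value)
AMU_TO_G = 1.66053906660e-24  # grams per atomic mass unit (CODATA 2018)


def ratio_to(fuel: str, reference: str) -> float:
    """How many times more energy per gram `fuel` releases than `reference`."""
    return ENERGY_J_PER_G[fuel] / ENERGY_J_PER_G[reference]


def dt_fusion_j_per_g(q_mev: float = 17.6) -> float:
    """Energy per gram of fuel for D + T -> He-4 + n (assumed fuel mix).

    Divides the energy released per reaction by the mass of one deuterium
    atom plus one tritium atom.
    """
    fuel_mass_amu = 2.014102 + 3.016049  # deuterium + tritium atomic masses
    return q_mev * MEV_TO_J / (fuel_mass_amu * AMU_TO_G)


if __name__ == "__main__":
    for ref in ("coal", "oil", "fission"):
        print(f"fusion vs {ref}: {ratio_to('fusion', ref):,.1f} times as much energy per gram")
    print(f"D-T estimate: {dt_fusion_j_per_g():.3g} J/g")
```

The ratios come out to roughly 12 million times coal, 7 million times oil, and about 3.9 times fission, and the D-T estimate of roughly 3.4 x 10^11 J/g is in line with the quoted 320 billion J/g.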

The International Thermonuclear Experimental Reactor (ITER), a magnetically confined plasma fusion project, involves 35 participating nations working jointly toward fusion energy; its original schedule called for first plasma in 2025 and full deuterium-tritium fusion operation by 2035, and it has since been delayed. Its seven members are the U.S., Russia, China, India, Japan, South Korea, and the EU. There have also been breakthroughs using high-powered laser beams to achieve fusion at the National Ignition Facility at Lawrence Livermore National Laboratory, which in December 2022 produced more fusion energy (3.15 MJ) than the laser energy delivered to the target (2.05 MJ).

Riemannian n-Dimensional Space and Time

Modern science was fundamentally changed by Bernhard Riemann (1826-1866), who replaced the old Euclidean-Newtonian mechanistic model and launched a revolution in mathematics, physics, and science. Riemann demonstrated that the axiomatic assumptions of Euclidean geometry are unjustified and do not accurately portray the real physical universe. He showed that the physical principles of the universe are the only legitimate basis upon which to build geometry; that one must leave the domain of mathematics and go to the domain of experimental physics. He ignited a new mathematical physics that paved the way for all sciences.

Riemann developed a non-Euclidean geometry and a theory of nested manifolds of successively higher dimension, extending geometry to n dimensions beyond 3-dimensional space.

Space is curved:

- Gravitational energy fields generate elliptical geometry (positive curvature) that “curves away” like the surface of a sphere, where there are no parallel lines (any two straight lines, or great circles, eventually meet) and the angles of a triangle add up to more than 180 degrees.

- Centrifugal energy fields generate hyperbolic geometry (negative curvature) that curves like a saddle, where lines that start out parallel spread apart and the angles of a triangle add up to less than 180 degrees.

- A zero energy field is a linear Euclidean geometry, non-curved (flat) space, where parallel lines never meet and the angles of a triangle add up to exactly 180 degrees.
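The angle sums in this list follow from the Gauss-Bonnet theorem: on a surface of constant curvature K, a triangle made of straight (geodesic) sides with area A has angles that add up, in radians, to π + K·A. The sum therefore exceeds 180 degrees when K > 0, equals it when K = 0, and falls short when K < 0. The Python sketch below, an illustration rather than anything from the article's sources, checks this on a unit sphere by measuring a triangle with one corner at the north pole and two on the equator, then applies the formula to flat and hyperbolic cases; the example triangles and areas are arbitrary choices.

```python
import math


def _dot(u, v) -> float:
    return sum(a * b for a, b in zip(u, v))


def _vertex_angle(a, b, c) -> float:
    """Angle (radians) at corner a of the spherical triangle abc on the unit sphere."""
    # Project b and c onto the tangent plane at a; these give the directions
    # in which the great-circle sides ab and ac leave the corner a.
    tb = [bi - _dot(a, b) * ai for ai, bi in zip(a, b)]
    tc = [ci - _dot(a, c) * ai for ai, ci in zip(a, c)]
    cos_angle = _dot(tb, tc) / math.sqrt(_dot(tb, tb) * _dot(tc, tc))
    return math.acos(max(-1.0, min(1.0, cos_angle)))  # clamp rounding error


def spherical_angle_sum_deg(a, b, c) -> float:
    """Sum of the three corner angles of a spherical triangle, in degrees."""
    total = _vertex_angle(a, b, c) + _vertex_angle(b, c, a) + _vertex_angle(c, a, b)
    return math.degrees(total)


def gauss_bonnet_angle_sum_deg(curvature: float, area: float) -> float:
    """Angle sum of a geodesic triangle on a surface of constant curvature K: pi + K * area."""
    return math.degrees(math.pi + curvature * area)


if __name__ == "__main__":
    # Arbitrary example: north pole plus two equator points 90 degrees apart.
    north, p, q = (0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)
    print(spherical_angle_sum_deg(north, p, q))          # three right angles
    print(gauss_bonnet_angle_sum_deg(1.0, math.pi / 2))  # same triangle: 1/8 of the sphere
    print(gauss_bonnet_angle_sum_deg(0.0, 2.0))          # flat plane
    print(gauss_bonnet_angle_sum_deg(-1.0, 0.5))         # hyperbolic plane
```

The sphere triangle has three right angles, 270 degrees in total, and because its area is one eighth of the unit sphere's 4π, the formula gives the same 270 degrees.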

Riemann’s work provided a basis for Planck’s physics, Einstein’s theory of relativity, Cantor’s transfinite set theory, quantum mechanics, Godel’s incompleteness theorem, Vernadsky’s geobiophysics, and much more. It changed physics, the theory of light, electromagnetism, hydrodynamics, thermodynamics, physiology, biophysics, biology, geology, evolution, chemistry, and more.

Relativity of Space and Time

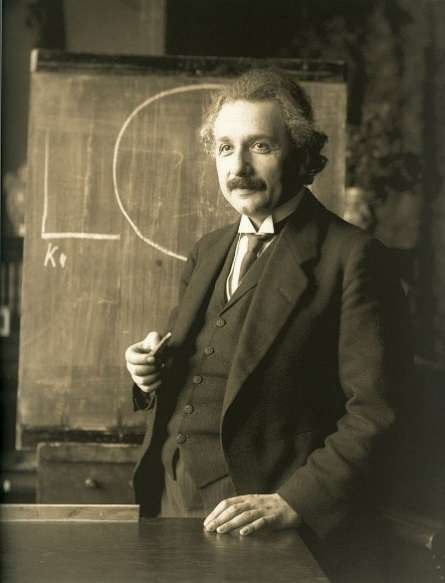

Albert Einstein (1879-1955) transformed the classical physics of Newton and Maxwell, pioneered quantum physics, and applied Riemann’s geometry to develop the theories of relativity, space-time, the elliptical gravitational field, and the elliptical geometry of the universe.

Einstein’s Special Theory of Relativity, 1905, resolved conflicts between Maxwell’s equations of electromagnetism and Newtonian mechanics. The Special Theory states that the laws of physics are the same in all inertial frames of reference and that the speed of light in a vacuum is the same for all observers. The theory further determines that the universe is not a 3-dimensional Euclidean linear space with absolute time, but rather a 4-dimensional (Minkowski) continuum of interconnected and relative space, time, matter, and motion. It made the aether, a hypothetical medium filling space, unnecessary. Most famous is the equation of mass-energy equivalence, E = mc², which states that the energy (E) of a particle at rest is the product of its mass (m) and the speed of light (c) squared. The theory also shows that, as measured by a stationary observer, moving clocks run slow and moving objects shrink in length along their direction of motion, effects that grow dramatically as speeds approach the speed of light.
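The formulas behind these effects are short. In special relativity, the Lorentz factor γ = 1 / sqrt(1 - v²/c²) tells how much a moving clock slows (Δt = γ·Δt₀) and how much a moving object shortens along its direction of motion (L = L₀/γ), while E = mc² gives the rest energy. The Python sketch below is an illustration; the example speed of 60% of light speed is an arbitrary choice that makes γ come out to 1.25.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s (exact by definition)


def lorentz_factor(v: float) -> float:
    """gamma = 1 / sqrt(1 - v^2 / c^2) for a speed 0 <= v < c (m/s)."""
    if not 0.0 <= v < C:
        raise ValueError("speed must satisfy 0 <= v < c")
    return 1.0 / math.sqrt(1.0 - (v / C) ** 2)


def dilated_time(proper_time: float, v: float) -> float:
    """Time a stationary observer measures while a clock moving at v ticks proper_time."""
    return lorentz_factor(v) * proper_time


def contracted_length(rest_length: float, v: float) -> float:
    """Length of an object moving at v, measured along its direction of motion."""
    return rest_length / lorentz_factor(v)


def rest_energy(mass_kg: float) -> float:
    """E = m c^2, in joules."""
    return mass_kg * C ** 2


if __name__ == "__main__":
    v = 0.6 * C  # arbitrary example speed
    print(lorentz_factor(v), dilated_time(1.0, v), contracted_length(1.0, v))
    print(f"{rest_energy(1e-3):.3e} J of rest energy in one gram")
```

At 0.6c, one second on the moving clock lasts 1.25 seconds for the stationary observer, a one-meter rod measures 0.8 meters, and one gram of mass corresponds to about 9 x 10^13 joules of rest energy.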

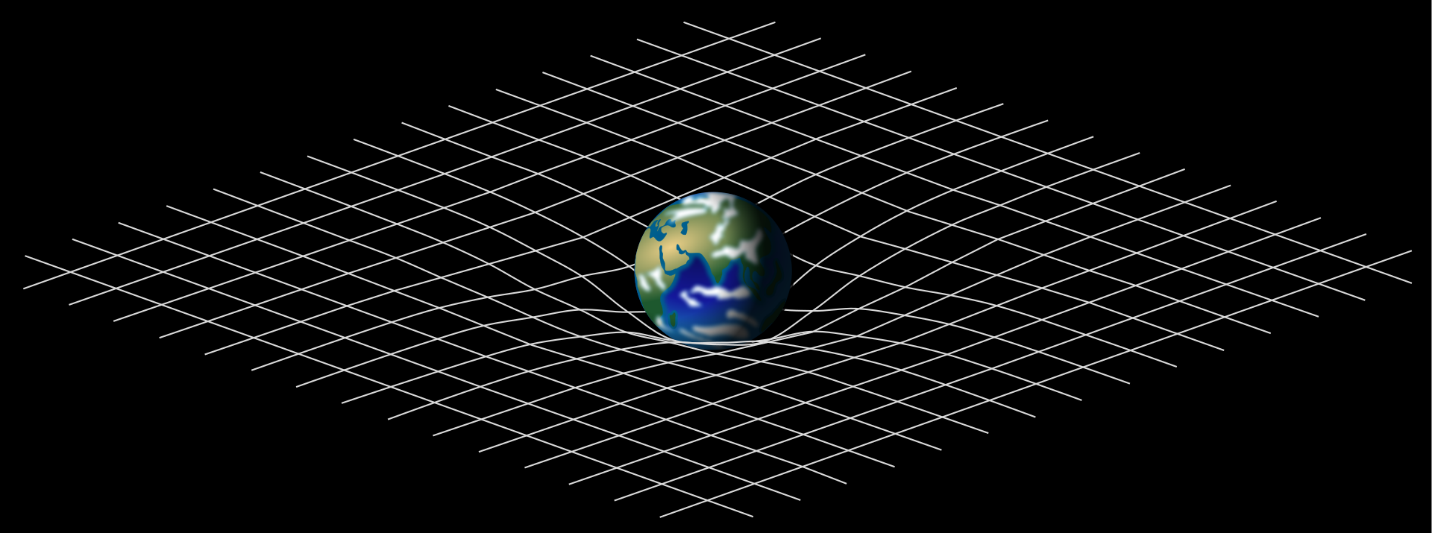

General Theory of Relativity space-time curvature

Einstein’s General Theory of Relativity, 1915, replaced Newton’s concept of gravitational attraction with the concept of ‘curved space’: gravitational motion follows the curvature of space-time geometry. Mass and energy generate this curvature, which is described with Riemannian geometry and shapes the orbits of planets and the motions of galaxies. Solutions to the theory’s equations, found by Alexander Friedmann and Georges Lemaître in the 1920s, showed that the universe could be expanding, as Edwin Hubble’s observations later supported, and Einstein’s own 1917 cosmological model described a universe that is finite but unbounded. Other aspects of the theory account for the gravitational bending of light, gravitational time dilation, black holes, gravitational waves, and hypothetical wormholes.
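Gravitational time dilation has a practical, measurable consequence in GPS satellites. The Python sketch below uses the weak-field approximation: a clock higher in Earth's gravity runs fast by about GM(1/r_ground - 1/r_orbit)/c², while the satellite's orbital speed slows it by about v²/(2c²), a special-relativity effect. The constants are standard approximate values, and ignoring Earth's rotation and the orbit's small eccentricity is a simplifying assumption. The net result, about 38 microseconds per day, matches the correction that the GPS system is known to apply to its satellite clocks.

```python
GM_EARTH = 3.986004418e14  # Earth's gravitational parameter G*M, m^3/s^2
R_GROUND = 6.371e6         # mean Earth radius, m (clock on the ground)
R_GPS = 2.656e7            # approximate GPS orbital radius, m (about 20,200 km up)
C = 299_792_458.0          # speed of light, m/s
US_PER_DAY = 86_400 * 1e6  # microseconds in one day


def gravitational_gain_us_per_day(r_low: float = R_GROUND, r_high: float = R_GPS) -> float:
    """How much faster (microseconds/day) a clock at r_high runs than one at r_low."""
    fractional_rate = GM_EARTH * (1.0 / r_low - 1.0 / r_high) / C ** 2
    return fractional_rate * US_PER_DAY


def orbital_speed_loss_us_per_day(r_orbit: float = R_GPS) -> float:
    """Slowing (microseconds/day) of a clock in a circular orbit, about v^2 / (2 c^2)."""
    v_squared = GM_EARTH / r_orbit  # speed squared for a circular orbit
    return v_squared / (2.0 * C ** 2) * US_PER_DAY


if __name__ == "__main__":
    gain = gravitational_gain_us_per_day()
    loss = orbital_speed_loss_us_per_day()
    print(f"gravity: +{gain:.1f} us/day, speed: -{loss:.1f} us/day, net: {gain - loss:+.1f} us/day")
```

Left uncorrected, a drift of this size would accumulate into position errors of kilometers per day, since light travels about 300 meters in a microsecond.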

Mapping the Infinite

Georg Cantor (1845-1918) changed the foundations of mathematics, logic, science, and philosophy. He enabled the development of set theory, theory of numbers, quantum mechanics, nuclear physics, computer science, astrophysics, and more. Cantor applied Riemann’s geometry of nested manifolds to develop Transfinite Numbers and Set Theory. He discovered how to measure and differentiate infinity into a hierarchy of successive infinite sets. Cantor’s Transfinite set theory became the fundamental theory of mathematics.

Cantor defined infinite well-ordered sets and proved that the infinite set of real numbers is more numerous than the infinite set of countable natural and rational numbers. The real numbers have a higher transfinite cardinal number (power) than the countable rational numbers; the real numbers include both the rational and the irrational numbers. The infinite set of real numbers is equivalent to the points on an infinite line, the points on an infinite plane, and the points in infinite 3D space, which all have the same transfinite cardinal magnitude (power). Cantor and others went on to show that there are numerous infinite sets in a successive hierarchy of cardinal magnitudes, which approach the highest Absolute Infinite.
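Two halves of Cantor's argument can be illustrated in code. First, the positive rationals can be listed one after another, each appearing exactly once, which is what "countable" means; the sketch uses the Calkin-Wilf sequence, one standard way to do this (Cantor's own zig-zag enumeration is another). Second, the diagonal argument shows that no list of infinite binary sequences, which behave essentially like binary expansions of real numbers between 0 and 1, can be complete: flipping the n-th digit of the n-th sequence produces a sequence that differs from every entry. A program can only check finitely many entries, so this illustrates the construction rather than proving it; the sample sequences are arbitrary.

```python
from fractions import Fraction
from itertools import islice
from typing import Callable, Iterator, List

# An infinite binary sequence, represented as a function from position n to 0 or 1.
BinarySequence = Callable[[int], int]


def calkin_wilf() -> Iterator[Fraction]:
    """Yield every positive rational number exactly once (Calkin-Wilf order)."""
    q = Fraction(1)
    while True:
        yield q
        # Next term: 1 / (2*floor(q) - q + 1).
        q = 1 / (2 * (q.numerator // q.denominator) - q + 1)


def diagonal(listed: List[BinarySequence]) -> BinarySequence:
    """Cantor's diagonal construction: differ from the n-th sequence at position n."""
    def d(n: int) -> int:
        return 1 - listed[n](n)
    return d


if __name__ == "__main__":
    print([str(q) for q in islice(calkin_wilf(), 10)])

    listed = [  # arbitrary example sequences
        lambda n: 0,             # 000000...
        lambda n: 1,             # 111111...
        lambda n: n % 2,         # 010101...
        lambda n: (n // 2) % 2,  # 001100...
    ]
    d = diagonal(listed)
    print([d(n) for n in range(len(listed))])  # differs from row n at position n
```

However long the list is made, the same one-line construction produces a sequence missing from it, which is why the reals cannot be enumerated the way the rationals can.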

Quantum Mechanics

In the first half of the 20th century, quantum physics and quantum mechanics transformed science and became the standard framework for describing atoms, subatomic particles, light, and wave functions. Quantum physics describes the properties and behaviors of molecules, atoms, and subatomic particles. It shows that light and other electromagnetic radiation come in discrete energy bundles called quanta.
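The size of one quantum is set by the Planck-Einstein relation, E = hf = hc/λ, where h is Planck's constant, f the frequency, and λ the wavelength. This Python sketch computes the energy of a single photon and how many photons per second a light beam carries; the 532 nm green wavelength and the 1 milliwatt power are arbitrary example values, roughly those of a green laser pointer.

```python
PLANCK_H = 6.62607015e-34        # Planck's constant, J*s (exact SI value)
C = 299_792_458.0                # speed of light, m/s
ELECTRON_VOLT = 1.602176634e-19  # joules per electronvolt (exact SI value)


def photon_energy_j(wavelength_m: float) -> float:
    """Energy of one photon, E = h * c / wavelength, in joules."""
    return PLANCK_H * C / wavelength_m


def photon_energy_ev(wavelength_m: float) -> float:
    """The same energy expressed in electronvolts."""
    return photon_energy_j(wavelength_m) / ELECTRON_VOLT


def photons_per_second(power_w: float, wavelength_m: float) -> float:
    """Number of quanta per second carried by a beam of the given power."""
    return power_w / photon_energy_j(wavelength_m)


if __name__ == "__main__":
    green = 532e-9  # m, arbitrary example wavelength
    print(f"{photon_energy_ev(green):.2f} eV per photon")
    print(f"{photons_per_second(1e-3, green):.2e} photons per second at 1 mW")
```

Each green photon carries about 2.3 eV, so even a faint 1 mW beam delivers on the order of 10^15 quanta every second, which is why the graininess of light goes unnoticed in everyday life.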

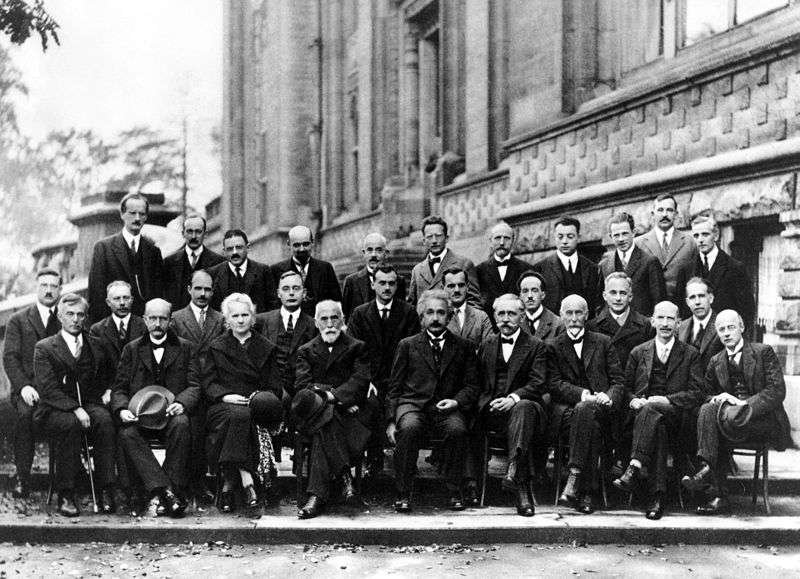

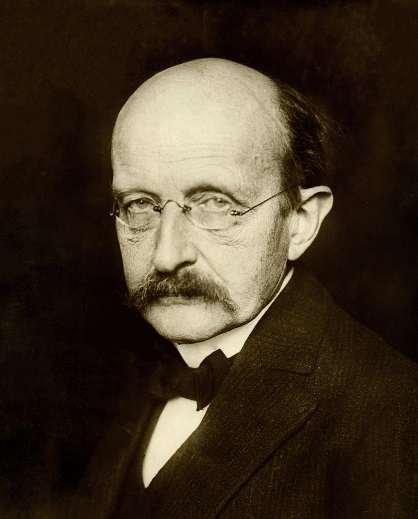

Quantum physics was a collaborative effort developed by Max Planck, Marie Curie, Albert Einstein, Niels Bohr, Erwin Schrodinger, Werner Heisenberg, Max Born, Louis de Broglie, Paul Dirac, Wolfgang Pauli, and others.

Max Planck

Though it seems absurd, spooky, chaotic, irrational, and without reason, quantum physics works. Richard Feynman is often quoted as saying, “If you think you understand quantum mechanics, you don’t understand quantum mechanics.” Niels Bohr is likewise quoted as saying, “If quantum mechanics hasn’t profoundly shocked you, you haven’t understood it yet.”

Quantum mechanics involves many counterintuitive aspects, such as:

- Wave-particle duality, where light and matter have both wave and particle properties.

- Entanglement, a non-classical connection in which particles share a single quantum state, so that measurements on them are correlated even over long distances (although this cannot be used to send signals faster than light).

- Superposition, a non-classical property in which two or more quantum states can be added together to give another valid quantum state. A particle in superposition has no single definite value of, for example, position until it is measured; the measurement then yields one of the possible outcomes with a probability set by the state.

- The uncertainty principle: certain pairs of quantities, such as position and momentum, cannot both be measured with arbitrary precision at the same time, and measuring a system generally disturbs it.

- Quantum tunneling, where a particle that meets a potential barrier can cross it even if its kinetic energy is smaller than the barrier’s height.

- Superfluidity, where a fluid such as liquid helium, at extremely low temperatures, flows with zero viscosity.

- Antimatter: antiparticles that annihilate their corresponding ‘normal’ particles if they collide.

- Inconsistency with the theory of relativity regarding gravity at the subatomic level; there is not yet an accepted quantum theory of gravity.

- Wave function collapse, in which a superposition appears to reduce to a single definite state when it is measured.
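Quantum tunneling can be estimated numerically. For a particle with energy E meeting a rectangular barrier of height V > E and width L, the wave function decays inside the barrier with constant κ = sqrt(2m(V - E))/ħ, and for thick barriers the transmission probability is roughly e^(-2κL). The Python sketch below uses this textbook approximation (it drops a prefactor of order one) for an electron; the 0.5 eV energy, 1 eV barrier, and nanometer widths are arbitrary illustrative values. Classically, the transmission would be exactly zero.

```python
import math

HBAR = 1.054571817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # kg
ELECTRON_VOLT = 1.602176634e-19   # J


def tunneling_probability(energy_ev: float, barrier_ev: float, width_m: float,
                          mass_kg: float = ELECTRON_MASS) -> float:
    """Approximate transmission through a rectangular barrier, T ~ exp(-2 * kappa * width).

    Valid only when the particle energy is below the barrier and 2 * kappa * width >> 1.
    """
    if energy_ev >= barrier_ev:
        raise ValueError("this approximation assumes energy below the barrier height")
    # Decay constant of the wave function inside the barrier.
    kappa = math.sqrt(2.0 * mass_kg * (barrier_ev - energy_ev) * ELECTRON_VOLT) / HBAR
    return math.exp(-2.0 * kappa * width_m)


if __name__ == "__main__":
    for width_nm in (0.5, 1.0, 2.0):  # arbitrary example widths
        t = tunneling_probability(0.5, 1.0, width_nm * 1e-9)
        print(f"width {width_nm} nm: T ~ {t:.1e}")
```

Doubling the width squares the already small probability, which is why tunneling matters at atomic scales, for example in scanning tunneling microscopes and in leakage through very thin transistor insulators, but is negligible for everyday objects.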

Erwin Schrodinger

Quantum mechanics’ practical applications include: semiconductors, computers, smart phones, transistors, lasers, MRI, GPS, telecommunications, secure networks, artificial intelligence, quantum computing, nuclear weapons, optics, electron microscopes, and many more.

Quantum Computers

Over the last few decades, a Second Quantum Revolution has brought about the development of quantum computers (QC), a new kind of computer that will revolutionize computing, analysis, and complex problem solving. They are becoming more tangible every day through the work of many high-tech companies.

QC have a different architecture: instead of conventional silicon-based processors, leading designs use superconducting circuits or trapped atomic ions. Quantum computers use quantum mechanical phenomena at the atomic and subatomic level to tackle certain extremely difficult tasks that ordinary computers cannot perform in practice.

Quantum Superposition: a register of qubits can be in a superposition of many classical bit patterns at once. Quantum algorithms exploit interference between these possibilities so that useful answers become likely when the register is measured, which allows certain problems to be solved in far fewer steps.

Quantum Entanglement: qubits can share a joint quantum state, so that their measurement outcomes are correlated even when they are physically separated. Quantum algorithms and quantum communication protocols use these correlations as a computing resource.

QC use quantum bits, or qubits, as the basic unit of memory; a qubit can be encoded, for example, in the polarization of a photon or the spin of an electron. A classical bit is either 0 or 1, so two classical bits hold exactly one of four possible states at a time: 00, 01, 10, or 11. With quantum superposition, two qubits can be in a combination of all four states simultaneously, and n qubits can be in a combination of 2^n states.
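The difference between bits and qubits can be made concrete with a small state-vector simulation. In the Python sketch below, a two-qubit register is a list of four amplitudes, one each for 00, 01, 10, and 11, and the probability of measuring each pattern is the squared magnitude of its amplitude (the Born rule). Applying a Hadamard gate to each qubit spreads the register evenly over all four patterns, and a Hadamard followed by a controlled-NOT produces an entangled Bell state whose two qubits always agree when measured. The gate choices are standard textbook examples, not tied to any particular hardware, and a measurement still returns only one pattern at a time.

```python
import math

# Amplitude order: |00>, |01>, |10>, |11>; qubit 0 is the left bit.
S = 1 / math.sqrt(2)
HADAMARD = [[S, S],
            [S, -S]]


def apply_single(state, gate, qubit):
    """Apply a 2x2 gate to qubit 0 or 1 of a two-qubit state vector."""
    new = [0.0] * 4
    for index, amp in enumerate(state):
        bits = [(index >> 1) & 1, index & 1]
        old_bit = bits[qubit]
        for new_bit in (0, 1):
            bits[qubit] = new_bit
            new[(bits[0] << 1) | bits[1]] += gate[new_bit][old_bit] * amp
    return new


def cnot(state):
    """Controlled-NOT: flip qubit 1 when qubit 0 is 1 (swaps the |10> and |11> amplitudes)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def probabilities(state):
    """Born rule: the probability of each outcome is the squared magnitude of its amplitude."""
    return [abs(a) ** 2 for a in state]


if __name__ == "__main__":
    zero = [1.0, 0.0, 0.0, 0.0]  # both qubits start in |0>
    uniform = apply_single(apply_single(zero, HADAMARD, 0), HADAMARD, 1)
    bell = cnot(apply_single(zero, HADAMARD, 0))
    print("uniform superposition:", [round(p, 3) for p in probabilities(uniform)])
    print("Bell state:           ", [round(p, 3) for p in probabilities(bell)])
```

The uniform state gives each pattern a 25% chance, while the Bell state only ever yields 00 or 11, each half the time: the two qubits are correlated even though neither has a definite value on its own.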

Quantum computers have capabilities far beyond current computers for certain kinds of problems. Shor’s algorithm could factor large numbers far faster than any known classical method, and Grover’s algorithm can search N unsorted items in about √N steps rather than N. For such problems, tasks that would take classical computers days, years, or longer could be reduced dramatically, although quantum computers are not expected to be faster at every task.
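Grover's search algorithm is a standard example of a problem-specific quantum speedup. The Python sketch below simulates it classically on a list of amplitudes: each round flips the sign of the marked item's amplitude (the "oracle") and then reflects every amplitude about their average (the "diffusion" step), which steadily concentrates probability on the marked item. After about (π/4)·√N rounds the marked item is found with high probability, compared with about N/2 guesses on average for a classical search. The register sizes and marked index are arbitrary examples, and simulating the algorithm on an ordinary computer of course gives no speedup itself.

```python
import math
from typing import List, Optional


def grover_probabilities(n_qubits: int, marked: int,
                         iterations: Optional[int] = None) -> List[float]:
    """Simulate Grover's search and return the measurement probability of each item."""
    n = 2 ** n_qubits
    if iterations is None:
        # Roughly (pi / 4) * sqrt(N) rounds maximize the chance of finding the item.
        iterations = math.floor(math.pi / 4 * math.sqrt(n))
    amps = [1 / math.sqrt(n)] * n  # equal superposition over all N items
    for _ in range(iterations):
        amps[marked] = -amps[marked]         # oracle: mark the target by a sign flip
        mean = sum(amps) / n
        amps = [2 * mean - a for a in amps]  # diffusion: inversion about the mean
    return [a * a for a in amps]


if __name__ == "__main__":
    for q in (3, 6, 10):  # arbitrary register sizes
        probs = grover_probabilities(q, marked=5)
        rounds = math.floor(math.pi / 4 * math.sqrt(2 ** q))
        print(f"N = {2 ** q:5d}: {rounds:2d} rounds, P(marked) = {probs[5]:.3f}")
```

For N = 1,024 items, about 25 rounds suffice, versus roughly 512 checks on average for a classical search; the advantage grows with N, but only as a square root.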

Quantum computing applications: computational, simulation and models, particle physics, cybersecurity and cryptology, healthcare, financial modeling and forecasting, artificial intelligence, weather forecasting, and more.

Antimatter Energy

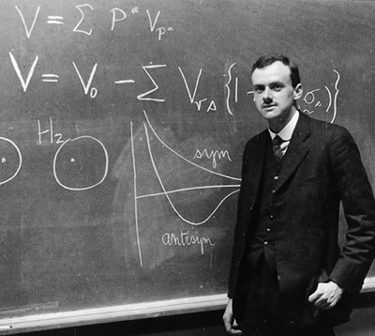

Paul Dirac

Antimatter, which powers the starships of the Star Trek TV series, is real. It is composed of antiparticles, which can bind together; for example, an antiproton and a positron form an atom of antihydrogen. Antimatter is produced in particle accelerators, in cosmic-ray collisions, and in some types of radioactive decay.

Antimatter was predicted by Paul Dirac in 1928, and Carl Anderson discovered the first antiparticle, the positron, in 1932. Richard Feynman and Ernst Stueckelberg proposed interpreting antiparticles as regular particles traveling backward in time.

A particle and its corresponding antiparticle have the same mass but opposite electric charge. For example, a proton has positive charge and an antiproton has negative charge. A collision between a particle and its antiparticle leads to their mutual annihilation, releasing large amounts of energy in the form of gamma-ray photons and other particles such as neutrinos.

According to the U.S. Energy Information Administration, antimatter has an energy density of 90 trillion Joules per gram (J/g) compared to 82 billion J/g for fission nuclear energy and 320 billion J/g for fusion energy.
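The antimatter figure follows directly from Einstein's E = mc², because annihilation converts the entire mass of both the particle and the antiparticle into energy. The Python sketch below assumes that the 90 trillion J/g is measured per gram of matter and antimatter combined; measured per gram of antimatter alone, the figure doubles, since an equal mass of ordinary matter is consumed with it.

```python
C = 299_792_458.0  # speed of light, m/s


def annihilation_energy_j(antimatter_g: float) -> float:
    """Energy (J) released when antimatter_g grams of antimatter annihilate with
    an equal mass of ordinary matter: E = (m_antimatter + m_matter) * c^2."""
    total_mass_kg = 2.0 * antimatter_g / 1000.0
    return total_mass_kg * C ** 2


def energy_per_gram_of_reactants() -> float:
    """J per gram of combined matter and antimatter (assumed basis: 0.5 g of each)."""
    return annihilation_energy_j(0.5)


if __name__ == "__main__":
    per_gram = energy_per_gram_of_reactants()
    print(f"{per_gram:.3e} J per gram of reactants")
    print(f"{annihilation_energy_j(1.0):.3e} J per gram of antimatter")
    print(f"{per_gram / 320e9:.0f} times the quoted fusion figure")
```

On the combined-mass basis the result is about 9.0 x 10^13 J/g, matching the quoted 90 trillion J/g and roughly 280 times the fusion figure.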

Antimatter will also provide fuel for high-speed interplanetary and interstellar space travel in the form of antimatter-catalyzed nuclear pulse propulsion. This will give spaceships a higher thrust-to-weight ratio. We will be able to travel to Mars in only a few months’ time.

Antimatter has numerous applications in sensors, diagnostics, medical imaging (such as PET scans), cancer treatment, and testing materials for defects in construction and manufacturing.

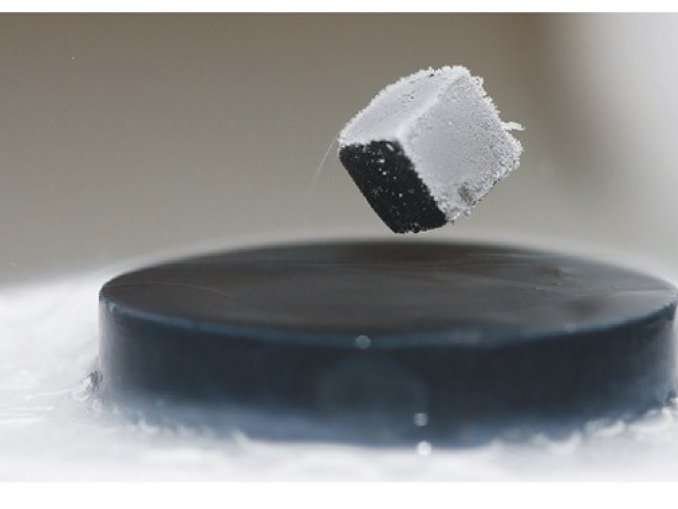

Superconductivity

Superconductivity is a non-linear phenomenon resulting from quantum mechanical processes. It occurs in certain materials cooled below a critical temperature: conventional superconductors must be cooled with liquid helium, while some high-temperature superconductors work at liquid-nitrogen temperatures. Electric current flows with no electrical resistance, resulting in no loss of energy to heat, and magnetic fields are expelled from the superconductor’s interior (the Meissner effect). An electric current in a loop of superconducting wire can persist indefinitely with no power source. The mechanism of high-temperature superconductivity is still not fully understood.
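The practical meaning of zero resistance shows up in the Joule heating formula P = I²R, which gives the power a wire loses as heat. This Python sketch compares a copper conductor with a superconducting one; the copper resistivity is a typical room-temperature handbook value, and the 1 km length, 100 mm² cross-section, and 1,000 A current are arbitrary example values. It deliberately ignores the energy needed to keep a superconductor cold and the small losses superconductors do have with alternating current.

```python
COPPER_RESISTIVITY = 1.68e-8  # ohm*m, copper near room temperature (typical value)


def wire_resistance(length_m: float, area_m2: float,
                    resistivity: float = COPPER_RESISTIVITY) -> float:
    """R = resistivity * length / cross-sectional area, for a uniform wire."""
    return resistivity * length_m / area_m2


def joule_loss_w(current_a: float, resistance_ohm: float) -> float:
    """Power turned into heat by a steady current, P = I^2 * R."""
    return current_a ** 2 * resistance_ohm


if __name__ == "__main__":
    r_copper = wire_resistance(1_000.0, 100e-6)  # 1 km of 100 mm^2 copper (example)
    print(f"copper: R = {r_copper:.3f} ohm, loss = {joule_loss_w(1_000.0, r_copper) / 1e3:.0f} kW")
    print(f"superconductor: loss = {joule_loss_w(1_000.0, 0.0):.0f} W")
```

About 168 kW would be lost as heat in the copper line, and none in the superconductor.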

Uses include: quantum computers, plasma physics, fusion energy, electric power transmission, power storage, particle accelerators, particle detectors, generators, electric motors, transformers, electromagnets, ore sorting, wind turbines, digital circuits, nanowire, cryogenics, smart grids, maglev trains, MRI, telecommunications, mobile phones, and others.

Nanotechnology

Nanotechnology, or Nanotech, is revolutionizing the technical, industrial, and medical sectors. Nanotechnology is applied quantum mechanics, manipulating atoms and molecules for fabrication of molecular structures, nanotubes, assemblies, materials, sensors, electronics, and devices. The National Nanotechnology Initiative defines nanotech as the manipulation of matter with at least one dimension sized from 1 to 100 nanometers (a nanometer is one billionth of a meter). Nanotech utilizes almost all physical sciences and is applicable in high tech, information technology, electronics, defense, security, biotech, medicine, transportation, energy, food, and environmental science.

The U.S. National Nanotechnology Initiative was launched in 2000 to coordinate multiple federal government agencies and departments in supporting and fostering nanotech research and development in the private sector and in education.

Nanotech makes materials stronger, lighter, and more durable. Extremely small nanoscale devices can provide sensors, monitors, and conduct repairs on the molecular scale.

Self-Organizing Universe

Vladimir Vernadsky

Vladimir Vernadsky (1863-1945) was a prominent Russian scientist. Western science is slowly accepting his theories; most of his works have not been translated. Vernadsky is the ‘father’ of environmental science, geobiology, biochemistry, geochemistry, biogeochemistry, and radiogeology. He developed the concept of the biosphere. He stated that his theories are based on the Riemann and Einstein geometric space-time manifold model of a dynamic, self-organizing universe.

To Vernadsky, evolution is not random as Darwin and Oparin claim, rather evolution is a “determined direction” of physical and living processes. The three principles of physical laws, life, and cognition exist simultaneously since the beginning of the universe. Life is living matter. Human reason is a physical force that changes the planet and changes the laws of the universe.

Vernadsky’s three phase space-time Riemannian higher ordered manifolds:

- Geosphere: the evolving abiotic non-living domain which is driven by the principle of universal physical laws. The geosphere is a dynamic, self-developing, complex system which strives to develop conditions for life.

- Biosphere: driven by the principle of life. The Biosphere is an integrated, dynamic and self-evolving complex system made of “living matter”. It determines and transforms the Geosphere and strives to create the conditions for cognition.

- Noosphere: driven by the principle of cognition, it is the dynamic, self-developing human social-economic system. It determines and transforms the Biosphere. Human reason is a physical force that changes the planet and strives to higher ordered creativity.

“Living matter gives the biosphere an extraordinary character, unique in the universe… Cosmic energy determines the pressure of life that can be regarded as the transmission of solar energy to the Earth’s surface… Activated by radiation, the matter of the biosphere collects and redistributes solar energy, and converts it ultimately into free energy capable of doing work on Earth…A new character is imparted to the planet by this powerful cosmic force. The radiations that pour upon the Earth cause the biosphere to take on properties unknown to lifeless planetary surfaces, and thus transform the face of the Earth… In its life, its death, and its decomposition an organism circulates its atoms through the biosphere over and over again.”

Vladimir Vernadsky, Biosfera, 1926

Stuart Kauffman

Biologist Stuart Kauffman is a leading proponent of self-organizing biological systems. He states that Darwin’s natural selection in evolution helps the biological system to adapt more successfully and develop higher ordered energy throughput and structure in the system. Kauffman works with the Santa Fe Institute researching the self-organization of open complex systems.

Origin of Life

Stanley Miller

In 1953 at the University of Chicago, Stanley Miller, working with Harold Urey, carried out a chemical experiment to demonstrate how organic molecules could have formed spontaneously from inorganic precursors under prebiotic conditions. It used a mixture of methane, ammonia, hydrogen, and water vapor, sparked by electricity, to form simple organic monomers such as amino acids, the building blocks of proteins. This experiment set the stage for what has become the dominant scientific paradigm of how life began on the planet.

Charles Darwin suggested in an 1871 letter that life may have originated from non-living matter in a “warm little pond”. The Russian scientist Alexander Oparin proposed in 1924 that the first molecules constituting the earliest cells slowly self-organized from a primordial soup. He later published his work in the book Origin of Life in 1936.

Plate Tectonics

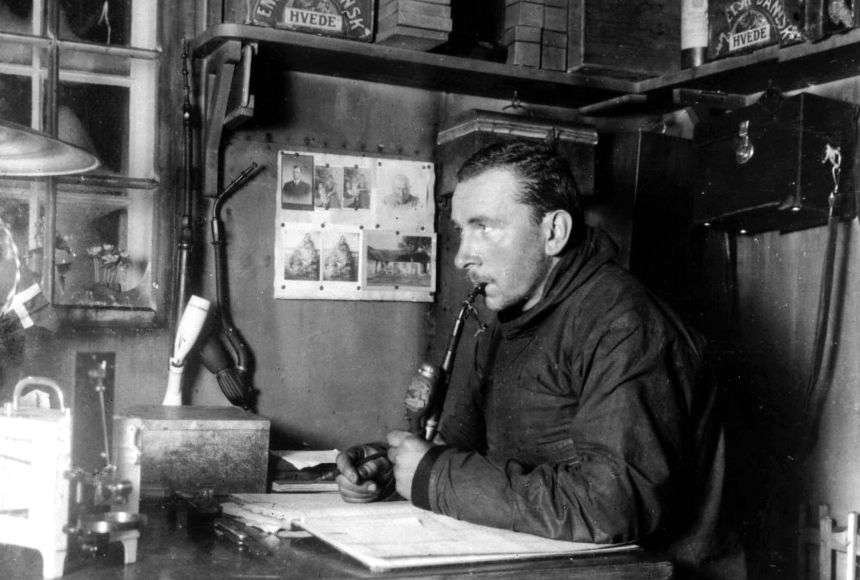

Alfred Wegener

German scientist Alfred Wegener (1880-1930) is best known as the originator of the continental drift hypothesis, having suggested in 1912 that the continents are slowly drifting around the Earth. His hypothesis was controversial and widely rejected by mainstream geology until the 1950s, when numerous discoveries such as palaeomagnetism provided strong support for continental drift, and thereby a substantial basis for today’s model of plate tectonics.

Plate tectonics is the generally accepted scientific theory today that considers the Earth’s lithosphere to comprise seven or eight large tectonic plates, which have been slowly moving since about 3.4 billion years ago. The model builds on the concept of continental drift. Plate tectonics came to be generally accepted by geoscientists after seafloor spreading was validated in the mid to late 1960s.

Mathematics Is Not Logic

Kurt Godel (1906-1978) revolutionized mathematics, logic, set theory, science, physics, quantum mechanics, philosophy, computer science, artificial intelligence, and other areas. Godel rigorously proved that mathematics is open ended and not complete. He showed that science is more than formal axiomatic mathematics; that the universe does not operate with formal linear mathematics; and that no formal set of rules can fully describe the universe. The human mind is not a formal logical system.

Godel and Einstein at Princeton Institute

In 1931 Godel published his two Incompleteness Theorems, undermining the program of formalists and logical positivists such as Bertrand Russell, Rudolf Carnap, David Hilbert, Ludwig Wittgenstein, and others who were trying to frame “the complete set of human knowledge into a formal logical system.” He proved that any consistent formal axiomatic system powerful enough to express basic arithmetic cannot be complete: there are statements in its language that it can neither prove nor disprove. He further proved that such a system cannot prove its own consistency. Formal systems are therefore not complete; there will always be things that are true but cannot be proven within the system, or problems it cannot solve or answer.

DNA

Watson, Crick, Wilkins, Franklin

The year 1953 was a triumphal benchmark for biology. James Watson and Francis Crick, drawing on X-ray diffraction work by Maurice Wilkins and Rosalind Franklin, developed a model of the double-helix geometric structure of DNA. The discovery was much publicized, and Watson, Crick, and Wilkins were awarded the Nobel Prize in 1962. Rosalind Franklin, whose work led to the discovery, went unrecognized by the prize because she had died in 1958 and the Nobel is not awarded posthumously. DNA (deoxyribonucleic acid) is a bio-polymer which carries genetic instructions for the development, growth, and reproduction of living organisms.
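The double-helix model explains how DNA copies its information: its two strands run in opposite directions and are held together by complementary base pairs, adenine (A) with thymine (T) and guanine (G) with cytosine (C), so either strand determines the other. The Python sketch below computes the partner strand for a short example sequence; the sequence is arbitrary and the function name is illustrative, not taken from any particular bioinformatics library.

```python
# Watson-Crick base pairing rules.
PAIR = {"A": "T", "T": "A", "G": "C", "C": "G"}


def reverse_complement(strand: str) -> str:
    """Return the partner strand, read in the same 5'-to-3' direction as the input.

    The partner runs antiparallel, so the sequence is reversed as well as complemented.
    """
    return "".join(PAIR[base] for base in reversed(strand.upper()))


if __name__ == "__main__":
    strand = "ATGCGT"  # arbitrary example sequence
    partner = reverse_complement(strand)
    print(strand, "pairs with", partner)
    # Complementing twice gives back the original strand.
    print(reverse_complement(partner) == strand)
```

Because each strand fully specifies its partner, separating the strands and rebuilding each partner yields two identical copies, the mechanism Watson and Crick pointed to for how genetic information is inherited.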

Nucleic acids were first isolated in the 19th century, by Friedrich Miescher in 1869. In 1938 Astbury and Bell published the first X-ray diffraction pattern of DNA. In 1944 the Avery–MacLeod–McCarty experiment showed that DNA is the carrier of genetic information.

The Human Genome Project was jointly researched by many institutions in the U.S., UK, France, Germany, Japan, China, and Russia from 1990 to 2003, with additional work up to 2021. The project determined the sequence of base pairs that makes up human DNA and identified, mapped, and sequenced the genes of the human genome.

Experimental studies of nucleic acids constitute a major part of modern biological and medical research and form a foundation for genome and forensic science, gene therapy, genetic engineering, bio-nanotechnology, agriculture, and the biotechnology and pharmaceutical industries.

Quantum Consciousness

Roger Penrose

Quantum consciousness is a speculative hypothesis that consciousness cannot be explained by classical mechanics. Its theorists suggest that consciousness may arise from quantum mechanical phenomena such as superposition, entanglement, quantum holography, and wave function collapse, and that these quantum processes correlate with and regulate neural synaptic and membrane activity.

Roger Penrose and Stuart Hameroff hypothesize that quantum wave function collapse provides a basis from which consciousness derives. They further suggest that neural microtubules host this quantum behavior. Other scientists who have posed quantum consciousness theories include Erwin Schrodinger, David Bohm, David Pearce, Karl Pribram, Henry Stapp, Walter Freeman, Yu Feng, and Hiroomi Umezawa.

Artificial Intelligence

Artificial Intelligence (AI) provides computer thinking systems which offer automated processing, analysis, presentation, comprehension, and approximate conclusions of complex data for science, industry, finance, government, military, healthcare, infrastructure, and other areas.

Artificial Intelligence is computer-simulated thinking and problem solving. The machine is programmed to perceive its environment and take action to achieve its goals. AI uses machine learning algorithms and software to replicate or mimic human cognitive characteristics and thinking processes. It recognizes patterns in behaviors and creates its own logic. The algorithms learn only from their input data and experience, and then do what they are programmed to do. They can predict sequences but offer no comprehension of the logic behind their decisions.
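How an algorithm "learns from input data" can be seen in one of the oldest machine learning methods, the perceptron. Instead of being given a rule, it starts with zero weights and nudges them whenever it misclassifies a labeled example, until its predictions match the data. The Python sketch below trains one to reproduce the logical AND of two inputs; the task, learning rate, and epoch count are arbitrary illustrative choices, and modern AI systems use far larger networks trained by related weight-adjustment methods.

```python
from typing import List, Tuple

Sample = Tuple[Tuple[int, int], int]  # ((x1, x2), target label 0 or 1)


def predict(weights: List[float], bias: float, x: Tuple[int, int]) -> int:
    """Output 1 if the weighted sum of the inputs is positive, else 0."""
    return 1 if weights[0] * x[0] + weights[1] * x[1] + bias > 0 else 0


def train_perceptron(samples: List[Sample], epochs: int = 20, lr: float = 1.0):
    """Perceptron learning rule: after each mistake, move the weights toward the target."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            weights[0] += lr * error * x[0]
            weights[1] += lr * error * x[1]
            bias += lr * error
    return weights, bias


if __name__ == "__main__":
    and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]  # example task
    w, b = train_perceptron(and_gate)
    print("learned weights:", w, "bias:", b)
    print([predict(w, b, x) for x, _ in and_gate])
```

The trained perceptron reproduces AND on all four inputs, yet it has no notion of what AND means; it has simply found numbers that fit the examples.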

AI does not, and cannot, fully function as the human mind. A statement attributed to computer science and artificial intelligence pioneer Alan Turing puts it this way: “Computers are limited to algorithms, humans can do non-algorithmic computable things. Computers are fast but not more intelligent.” It is a mistake to attribute understanding and creativity to mechanical algorithmic machines.

Artificial Intelligence uses several cognitive applications, such as: Machine Learning, Natural Language Processing, Optical Character Recognition, Computer Vision, Artificial Neural Network, Robotic Process Automation, Self-Reconfiguring Modular Robotics, and Swarm Robotics. Artificial Intelligence has applications in computers, robotics, nanotechnology, smart materials, medical, security, and many others.

References

Publications: 21st Century, Astronomy, Discover, New Scientist, Science, Science Alert, Science News, Scientific American, Smithsonian, National Geographic, Psychology Today

Institutions: Atomic Energy Commission, Bell Labs, CalTech, Columbia University, Department of Energy, Harvard, ITER, Lawrence Livermore Labs, Los Alamos Labs, MIT, National Nanotechnology Initiative, NASA, Oxford University, Princeton University, Santa Fe Institute, Stanford University

Books

- The Biosphere and the Noösphere, Vladimir Vernadsky, American Scientist, 1945.

- A Brief History of Time, Stephen Hawking, Bantam, NYC, 1988.

- The Emperor’s New Mind, Roger Penrose, Oxford, 1989.

- The Fabric of the Cosmos: Space, Time, and the Texture of Reality, Brian Greene, Knopf, NYC, 2004.

- Four Lectures on Relativity and Space, Charles Steinmetz, 1923.

- Geometry, Relativity and the Fourth Dimension, Rudy Rucker, 1977.

- Infinity and the Mind, Rudy Rucker, Birkhauser, Boston, 1992.

- Humanity in a Creative Universe, Stuart Kauffman, Oxford, 2016.

- The Origins of Order: Self-Organization and Selection in Evolution, Stuart Kauffman, Oxford, 1993.

- Paradigms Lost: Images of Man in the Mirror of Science, John Casti, Wm. Morrow, NYC, 1989.

- Parallel Worlds: A Journey Through Creation, Higher Dimensions, and the Future of the Cosmos, Michio Kaku, Doubleday, NYC, 2004.

- Physics of the Future, Michio Kaku, Doubleday, NYC, 2011.

- The Structure of Scientific Revolutions, Thomas Kuhn, University of Chicago, 1962.

- The Universe in a Nutshell, Stephen Hawking, Bantam, NYC, 2001.